Emotion AI has become a game changer for enterprises looking to make their customer experiences more positive, personal and competitive in today’s digital-first environment. Using advanced artificial intelligence, pioneered for the enterprise by Uniphore, businesses can now access real-time, actionable insights into what motivates customers and B2B buyers to act. That’s been a boon for individual interactions, but what about group video engagements? Can emotion AI gauge the emotional timbre of, say, a conference room, where different people are feeling and acting in different ways? According to Saurabh Saxena, Vice President of Software Engineering for Uniphore, the answer is a resounding “yes.”

Saxena, who has led the development of several emotion-based innovations for the enterprise AI leader, is intimately familiar with the technology. One of the biggest challenges, he says, is the data, or rather, the amount of data emotion AI needs to operate effectively and deliver meaningful results. “With emotion AI, there’s a massive amount of data that has to be analyzed, both in real time and post call,” he explains. “For five to six people meeting for more than an hour, it’s about a million and a half rows of data that has to be processed. Each row has about 40 to 50 values.” That’s huge. (By comparison, a 40-minute call using conversational AI needs to analyze only about 50 rows.)

From facial expressions to emotional data

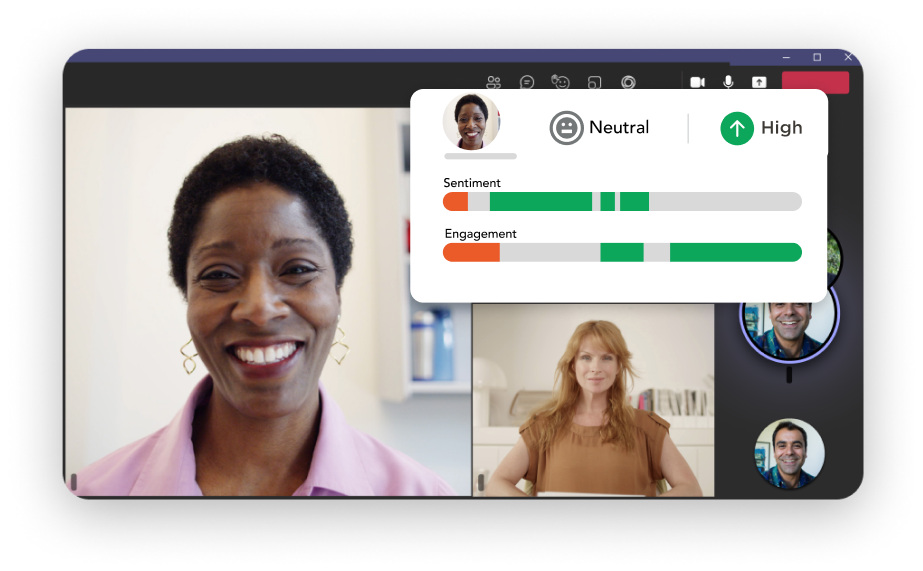

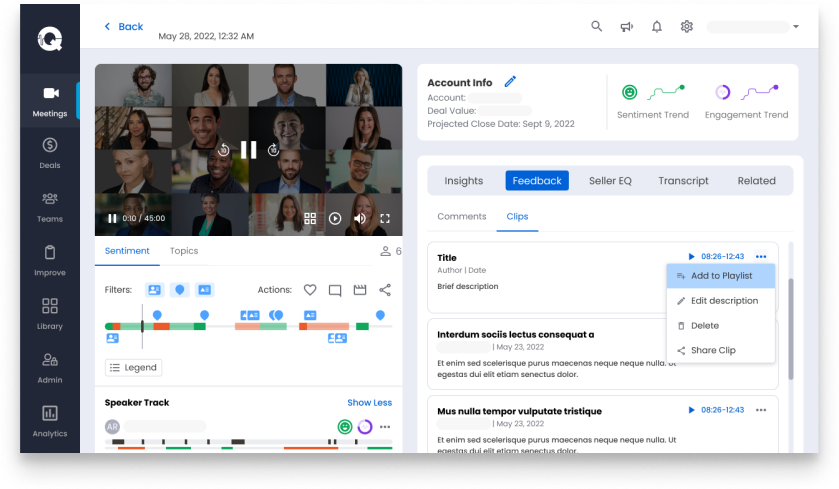

Another challenge is capturing the data. For video interactions, including conference room engagements, Uniphore leverages computer vision to identify participants and analyze their facial expressions. The program captures a video tile for each participant in the room (as well as for any remote participants) and tracks their position even if they switch chairs or leave and reenter the room. It then samples each video tile at 24 frames per second for analysis. Next, it crops each participant’s face and applies a “mesh” of roughly 20 tracking points to targeted facial areas. That’s when the magic happens.

“We run that through a neural network we’ve trained with about one million images of what is called ‘people in the wild’ with various emotions,” Saxena explains. “Using those tracking points, we measure the position of facial muscles for each instant against a psychological analysis model to glean the actual emotion.”

That model follows psychology’s definitive emotional yardstick. “We measure emotion using the Ekman Model, which is considered the baseline study for behavioral science,” says Saxena. “Ekman’s model says that human emotion can be summarized into six basic emotions—fear, anger, joy, sadness, disgust and surprise—and 200 secondary emotions. We train [our emotion AI] on the Ekman Model to find the raw emotion. We then look at how deep that emotion is.”

To determine how deep a detected emotion is, Saxena’s team zeroes in on two key parameters: valence and arousal. Valence indicates whether an emotion is positive or negative, and to what degree. Arousal indicates the intensity of that emotion and is represented as high, medium or low. Together, these parameters can give an accurate assessment of how a meeting participant is feeling, and how intense that feeling is, at any given moment.
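As a rough illustration of the two parameters, here is a minimal Python sketch. The numeric ranges, the cut-off values and the `EmotionReading` name are assumptions made for this example; the article does not describe how Uniphore actually encodes these values.

```python
from dataclasses import dataclass

# Hypothetical cut-offs for bucketing arousal; the real thresholds are not published.
LOW_MAX = 0.33
MEDIUM_MAX = 0.66


@dataclass(frozen=True)
class EmotionReading:
    """One participant's emotional state at a single instant (illustrative only)."""
    valence: float  # assumed range: -1.0 (very negative) to +1.0 (very positive)
    arousal: float  # assumed range: 0.0 (calm) to 1.0 (intense)

    def polarity(self) -> str:
        """Direction of the emotion: positive, negative or neutral."""
        if self.valence > 0:
            return "positive"
        if self.valence < 0:
            return "negative"
        return "neutral"

    def arousal_level(self) -> str:
        """Bucket the intensity into the low / medium / high bands."""
        if self.arousal <= LOW_MAX:
            return "low"
        if self.arousal <= MEDIUM_MAX:
            return "medium"
        return "high"


reading = EmotionReading(valence=-0.6, arousal=0.8)
print(reading.polarity(), reading.arousal_level())  # prints: negative high
```

A reading like this one would correspond to a strongly felt negative reaction, for example visible irritation rather than mild disinterest.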

Saxena, however, is quick to point out that emotions don’t exist in a vacuum. In a meeting environment, they are largely reaction driven. A presenter might say something that rubs a participant the wrong way, for instance. While that participant might not verbalize their displeasure, their expressions may reveal how they feel. And while one participant’s negativity doesn’t necessarily doom a meeting to fail, if multiple participants show the same negative signs, it could indicate that it’s time to change tactics or direction.

To gauge the emotional state of a group, emotion AI looks for what is called mirroring of emotions. “We look for durations of time in a conversation when more than one person was showing a negative emotion, for instance,” says Saxena. “If we identify a negative emotion on one person, we will ask ourselves if, during this time, was somebody else also nearing that emotion?”
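The search Saxena describes can be sketched as a scan over per-participant time series. The example below is a simplified stand-in, not Uniphore's method: it assumes one valence value per participant per time step, and the function name, the `negative_below` threshold and the `min_people` parameter are all hypothetical.

```python
from typing import Dict, List, Tuple


def shared_negative_spans(
    valence_by_person: Dict[str, List[float]],
    negative_below: float = -0.2,  # hypothetical threshold for "negative"
    min_people: int = 2,           # "more than one person"
) -> List[Tuple[int, int]]:
    """Return (start, end) step ranges, end exclusive, in which at least
    `min_people` participants show negative valence at the same time."""
    if not valence_by_person:
        return []
    n_steps = min(len(series) for series in valence_by_person.values())
    spans: List[Tuple[int, int]] = []
    start = None
    for t in range(n_steps):
        negative_count = sum(
            1 for series in valence_by_person.values() if series[t] < negative_below
        )
        if negative_count >= min_people:
            if start is None:
                start = t  # a shared-negative span begins here
        elif start is not None:
            spans.append((start, t))  # the span ended at the previous step
            start = None
    if start is not None:
        spans.append((start, n_steps))  # span runs to the end of the meeting
    return spans


meeting = {
    "ana": [0.1, -0.5, -0.6, 0.2, -0.4],
    "ben": [0.3, -0.3, -0.4, 0.1, 0.0],
    "cal": [0.0, 0.2, -0.5, 0.3, -0.3],
}
print(shared_negative_spans(meeting))  # prints: [(1, 3), (4, 5)]
```

In this toy meeting, two or more people are negative during steps 1 to 2 and again at step 4, which is the kind of signal that might prompt a presenter to change direction.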

Capturing emotional data from multiple sources

Facial analysis, however, is only part of the emotional equation. Humans, after all, are highly complex communicators. We express how we feel in multiple ways or modalities. To capture the full scope of how a person—or a group—is feeling, emotion AI must capture, analyze and fuse together emotional data from a variety of sources. That’s been a challenge for AI developers—until now.

“What makes our emotion AI unique is that it is multimodal fusion-based emotion AI,” says Saurabh Saxena, Vice President of Software Engineering.

Unlike simpler programs that focus on one or two emotion indicators, Uniphore’s emotion AI analyzes multiple benchmarks and fuses those results together to paint a highly detailed picture of a person’s emotional state. In addition to facial analysis, the program analyzes body language, sentiment, back channel and talk-overs, disposition and, of course, tone.

“We apply a similar approach to tonal sentiment analysis,” Saxena says. “[Using] Ekman’s Model, we dial it down to valence and arousal (intensity). We also look for empathy, politeness, talk speed and hesitation personality traits. With empathy, when a transition happens from one speaker to another, [we ask] ‘am I mirroring your emotion or not?’ You may be negative. I may be joking. That’s lack of empathy.”

To add another layer of accuracy, Uniphore’s emotion AI also looks at the words meeting participants use. By converting participants’ speech into text, the program can then perform sentiment analysis around valence and arousal based on the words being used. It also looks for signs of empathy, politeness, talk speed and hesitation throughout the written conversation.

Getting the full picture of emotion

How does Uniphore analyze all these emotional modalities at the same time? “We have nine to ten neural networks that are running in conjunction to enable all of this,” Saxena explains. “We take these three signals (computer vision, tone and text) and try to produce a single value for emotion. That’s interesting because your voice may be happy, but your expression may be angry. So, we’ve built this mathematical model that allows for these different emotions to get fused into a single value by itself.”
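The article does not disclose the mathematical model behind the fusion step. As a deliberately simple stand-in, the sketch below combines the three signals with a weighted average. The weights, the valence scale of -1.0 to +1.0 and the `fuse_valence` name are assumptions for illustration only.

```python
from typing import Mapping

# Hypothetical per-modality weights; the real model's parameters are not published.
DEFAULT_WEIGHTS = {"vision": 0.4, "tone": 0.3, "text": 0.3}


def fuse_valence(
    scores: Mapping[str, float],
    weights: Mapping[str, float] = DEFAULT_WEIGHTS,
) -> float:
    """Fuse per-modality valence scores (assumed -1.0..+1.0) into one value.

    Modalities missing from `scores` are skipped and the remaining
    weights are renormalized so they still sum to 1.
    """
    used = [modality for modality in scores if modality in weights]
    total_weight = sum(weights[modality] for modality in used)
    if total_weight == 0:
        raise ValueError("no weighted modalities to fuse")
    return sum(scores[m] * weights[m] for m in used) / total_weight


# A happy-sounding voice paired with an angry expression.
mixed_signals = {"vision": -0.7, "tone": 0.5, "text": 0.1}
print(f"{fuse_valence(mixed_signals):.2f}")  # prints: -0.10
```

With these made-up weights the angry expression slightly outweighs the cheerful voice, so the fused value lands just below neutral. A production system would likely learn how much to trust each signal rather than fix the weights by hand.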

The solution even accounts for variances in personality from person to person. “There are people who are constantly smiling and there are people who just have a frown on their face,” Saxena continues. “What fusion does is establishes a baseline for each one, and says, ‘I think for this person, neutral is a little higher than normal or neutral is a little lower than normal.’ Then, when we do the sentiment analysis, it’s on different baselines. So, we personalize the emotion range for each person.”
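One simple way to picture per-person baselines is to treat each participant's typical reading as their personal "neutral" and measure everything relative to it. The sketch below uses the median of a participant's own readings as that baseline. This choice, and the `personalize` function name, are assumptions for the example rather than a description of Uniphore's fusion model.

```python
from statistics import median
from typing import Dict, List


def personalize(valence_by_person: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Shift each participant's valence readings so that their own
    median reading becomes 0.0, i.e. their personal neutral."""
    adjusted: Dict[str, List[float]] = {}
    for person, series in valence_by_person.items():
        baseline = median(series) if series else 0.0
        # Round to keep the illustrative output free of float noise.
        adjusted[person] = [round(value - baseline, 3) for value in series]
    return adjusted


raw = {
    "smiler": [0.5, 0.6, 0.5, 0.1],     # habitually cheerful face
    "frowner": [-0.3, -0.3, 0.1, -0.3],  # habitually stern face
}
print(personalize(raw))
```

After the shift, the habitual smiler's dip to 0.1 shows up as a clearly negative move, while the habitual frowner's rise to 0.1 shows up as a clearly positive one, even though the two raw values are identical.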

The program also looks at critical topics over the course of a meeting and ranks segments according to how relevant they seem to the conversation. For example, it will rank casual chitchat and banter lower than topical discussions, so the sentiment from one segment doesn’t skew the overall meeting analysis.
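The effect of ranking segments can be illustrated with a relevance-weighted average. In the sketch below, the `Segment` type, the relevance scores and the valence values are all invented for the example; how Uniphore scores relevance is not described in the article.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Segment:
    label: str
    relevance: float  # hypothetical score: 0.0 (off topic) to 1.0 (core topic)
    valence: float    # assumed range: -1.0 to +1.0


def meeting_valence(segments: Iterable[Segment]) -> float:
    """Average segment valence, weighted by how relevant each segment is."""
    segments = list(segments)
    total_relevance = sum(s.relevance for s in segments)
    if total_relevance == 0:
        return 0.0  # nothing relevant to score; treat as neutral
    return sum(s.relevance * s.valence for s in segments) / total_relevance


agenda = [
    Segment("small talk", relevance=0.1, valence=0.8),
    Segment("pricing discussion", relevance=1.0, valence=-0.4),
    Segment("next steps", relevance=0.9, valence=0.2),
]
unweighted = sum(s.valence for s in agenda) / len(agenda)
print(f"unweighted: {unweighted:.2f}")             # prints: unweighted: 0.20
print(f"weighted: {meeting_valence(agenda):.2f}")  # prints: weighted: -0.07
```

Here the cheerful small talk makes the plain average look positive, while the weighted figure reflects the cooler reception during the pricing discussion.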

All of this—the multimodal emotional analysis, the baseline correcting, the conversational segment ranking—is done in real time. “It really executes at the speed of light,” says Saxena. “We are processing thousands and thousands of data points in microseconds.” Capturing and processing that much data would have been unthinkable even a few years ago. However, the technology has changed dramatically—and so too has the need. With more group engagements happening over video, remote presenters need all the help they can get to understand how participants feel and to keep them engaged. With emotion AI, they can present like they’re standing in the conference room—even if they’re thousands of miles away.

Want to learn more about emotion AI?

Read how Uniphore transforms emotion into actionable intelligence here. To learn more about how emotion AI can optimize group engagements, including sales calls and conference room meetings, contact our team of experts.